ChatGPT Uses 519ml of Water Per Query: The Real Data on AI Environmental Impact (2025)

Is AI destroying the environment? Research shows infrastructure choices matter 10-25x more than your usage. Here's what writers should know about AI's impact.

TL;DR

Each 100-word ChatGPT query uses 519ml of water (one bottle). But here’s what actually counts: infrastructure choices matter 10-25 times more than your individual usage.

The Real Takeaway

- Don’t feel guilty about using AI. Your 50 daily queries barely register compared to one data center switching cooling systems.

- Do demand better infrastructure. AI companies desperately want writers’ buy-in—we’re the showcase use case. That gives us leverage.

- The actual problem: Infrastructure built badly now = stuck with it for 20-30 years. $64 billion in data center projects already blocked since 2023 due to unsustainable practices.

You’re revising your novel’s opening chapter. You ask ChatGPT to punch up the dialogue. A hundred words later, you’ve got three options to consider.

What you don’t see: You just used 519 milliliters of water—the environmental cost of a single AI query. That’s a standard water bottle sitting on your desk right now.

The environmental impact of AI writing tools is real, but online debates miss the point entirely. After three months researching ChatGPT’s water usage, data center energy consumption, and infrastructure sustainability, I found something surprising: Your individual usage barely matters. Infrastructure choices matter 10-25 times more.

Here’s what writers should know about AI’s environmental impact—and what we can do about it.


The Hidden Infrastructure: Where Your AI Queries Go

When you hit “Generate” on your writing assistant, whether it’s ChatGPT, Claude, Gemini, or another tool, your words travel through a network of servers in data centers that may be thousands of miles away.

Every AI query consumes resources in three different ways. It needs:

Electricity to run the servers (compute)

Electricity to cool the servers (air conditioning, fans)

Water to cool the systems (evaporative towers, power plants)

It’s invisible to us because that’s the nature of cloud computing: you’re using someone else’s hardware, somewhere else.

As writers, we think in word count. The AI industry thinks in compute. Every time you regenerate a response because the first one wasn’t right, you’re spinning up servers again. Every time you paste in your 5,000-word chapter for feedback, you’re using more resources than a simple query does.

The Numbers: How Much Water and Energy Does AI Really Use?

Water Consumption: 519ml Per 100-Word Query

According to a 2023 study by researchers at UC Riverside and the University of Texas at Arlington, each 100-word ChatGPT response uses about 519 milliliters of water, or one standard bottle.

A 1,000-word revision would use about 1.4 gallons (5.2 liters), or roughly two and a half 2-liter soda bottles. If you average about 50 queries a day, that’s around 26 liters, enough to fill a large kitchen pot 6-7 times.
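The arithmetic above can be checked in a few lines. This is a minimal sketch that takes the study’s ~519 ml per 100-word response as its only input and scales it linearly with word count; linear scaling is an assumption, and the 4-liter pot is a hypothetical reference size.

```python
# Rough water-footprint arithmetic, assuming water use scales linearly with
# output length at ~519 ml per 100-word response (the figure cited above).
ML_PER_100_WORDS = 519
LITERS_PER_US_GALLON = 3.785

def water_liters(words: int) -> float:
    """Estimated cooling water (liters) for a response of `words` words."""
    return ML_PER_100_WORDS * (words / 100) / 1000

revision_l = water_liters(1000)                # one 1,000-word revision
revision_gal = revision_l / LITERS_PER_US_GALLON
daily_l = 50 * water_liters(100)               # 50 queries of ~100 words each
POT_LITERS = 4.0                               # hypothetical large kitchen pot

print(f"1,000-word revision: {revision_l:.2f} L ({revision_gal:.2f} gal)")
print(f"50 queries/day: {daily_l:.0f} L, about {daily_l / POT_LITERS:.1f} pots")
```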

Context Check

Before you panic, remember this.

That daily total is less carbon than a round-trip flight from New York to Chicago, and less water than filling a hot tub once.

But still, isn’t that a lot of water? Depends where the data center is. In water-rich Iowa, it’s a non-issue. In drought-stressed Chile, it’s why Google’s data center project got blocked by courts after a community referendum.

Your individual usage isn’t the problem—it’s where and how the infrastructure runs.

Why Should Writers Care About Environmental Impact

“AI destroys the environment.”

“Real writers don’t need AI and don’t contribute to climate destruction.”

“The issue I have is the environmental damage it’s doing.”

On social media, creatives are constantly attacked for using AI tools. Not gentle criticism, but full-on moral condemnation.

And honestly, I’m tired of seeing the same misconceptions repeated.

The anti-AI side says: ‘Every AI query is environmental destruction, stop using it immediately.’

The pro-AI side says: ‘The environmental impact is negligible, it’s all fearmongering.’

The truth: both sides have it wrong.

The research shows that environmental impact is real but specific—and almost entirely about infrastructure choices, not individual usage.

That distinction matters. A lot.

Why The “Just Don’t Use AI” Argument Doesn’t Work

It Misplaces Responsibility

Telling people not to use generative AI because it hurts the environment is like telling someone to stop using smartphones to stop rare earth mining. That “solution” ignores where the actual leverage is.

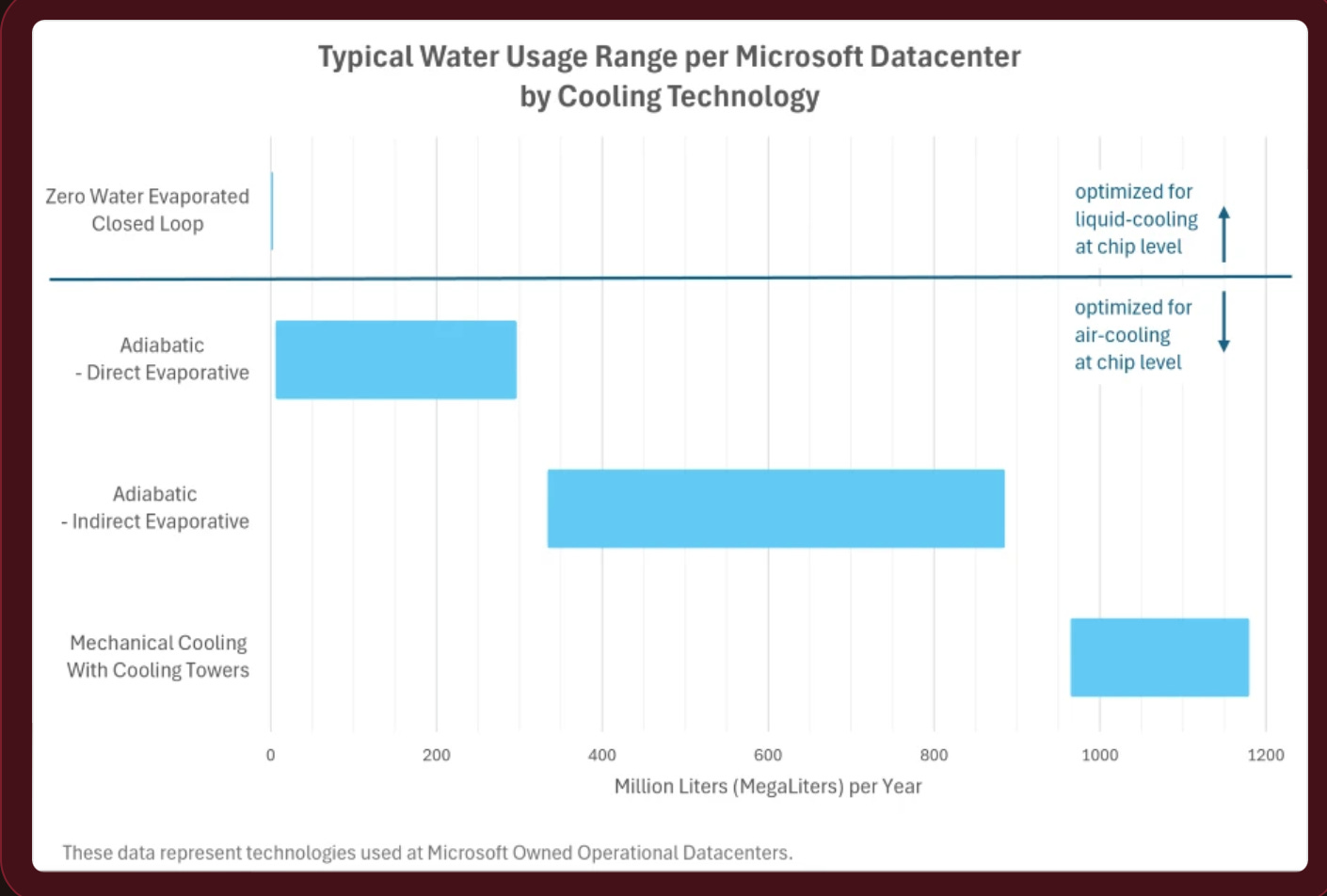

One infrastructure upgrade—like Microsoft’s switch to liquid cooling—can reduce water usage by 31–52%. That single decision has more environmental impact than thousands of writers giving up AI entirely.

When someone says using AI destroys the planet, what they’re really saying is, “I don’t understand how infrastructure works.”

It Treats All Usage as Equal

A query processed at Google’s facility in Hamina, Finland (seawater cooling, 100% renewable energy, PUE of 1.10) has a dramatically different environmental impact from the same query processed at an air-cooled facility in Arizona running on grid power.

Individual use doesn’t determine the impact. The data center’s cooling system does.

So the question isn’t “should I use AI”—it’s “should I demand better infrastructure from the companies providing it.”

It Ignores Relative Impact

The environmental cost of your daily AI writing usage (~62.5 kg CO₂ annually) is:

Less than 1/3 of one round-trip flight from New York to Los Angeles

1/10th of the average American’s annual beef consumption

1/200th of the average American’s annual carbon footprint

Does that mean it’s zero? No. Does it mean writers should feel personally responsible for climate change because they used ChatGPT to punch up dialogue? Also no.

The real environmental problems are:

Coal power plants

Industrial agriculture

Transportation systems

And—relevant to this article—data centers built with air cooling in water-stressed regions

Not your revision queries.

Why I’m Not Saying Ignore The Environmental Cost

Just because individual usage isn’t the problem doesn’t mean we should ignore infrastructure impact. Here are some reasons why I care about this, beyond having a response to online critics:

Writers Have A Self-Interest in Sustainable AI

If AI infrastructure keeps getting built unsustainably, what happens?

Communities block new data centers (already happening: $64 billion in projects blocked since 2023)

Governments impose moratoriums on grid connections (Ireland did this)

Services become more expensive or restricted

Access becomes rationed

Writers who want these tools to remain available and affordable should want them built sustainably. This isn’t altruism—it’s pragmatism.

The Information Vacuum Helps No One

Right now, most public discussion about AI and the environment is:

Anti-AI people saying “it’s all terrible, stop using it”

Pro-AI people saying “it’s fine, stop worrying”

Almost nobody saying “here are the actual numbers and where leverage actually exists”

That information vacuum means writers can’t make informed decisions. We end up either guilt-ridden over queries that don’t matter, or blindly defending infrastructure choices we should be questioning.

We Have Leverage

AI companies are desperate for writers and other artists to embrace their tools. We’re the showcase use case.

That means our feedback matters. If we show that we care, we move the needle toward improvements.

What “Caring About This” Looks Like

After months of research, here’s what I think “caring about AI’s environmental impact” actually means:

NOT THIS:

Feeling guilty every time you use ChatGPT

Counting your queries to stay under some arbitrary limit

Defending yourself against bad-faith online attacks

Avoiding AI tools entirely to be “pure”

THIS:

Understanding what the actual environmental costs are

Knowing which factors matter (infrastructure) vs. which don’t (your query count)

Demanding transparency from providers

Supporting sustainable infrastructure development

Using tools intentionally, not wastefully

Having informed conversations instead of reactive ones

If you’re reading this because someone told you that using AI makes you a bad person or environmental destroyer—they’re wrong. The infrastructure choices of data center operators matter 10-25 times more than your individual usage.

But if you’re reading this thinking ‘great, so I can ignore all environmental concerns’—you’re also wrong. The question isn’t whether to use AI, it’s whether to demand it be built sustainably.

And honestly? That’s a much more productive conversation than the one we’ve been having online.

The Infrastructure Truth: Why Cooling Systems Matter 10-25x More Than Your Usage

I’ve read tons of data reports from Microsoft, Google, and academic researchers. Here’s what I learned:

Your decision to stop using AI barely makes a difference. But whether a data center uses air or liquid cooling changes its water use by 31-52%.

Breaking Down Cooling Systems

Air Cooling: The Least Efficient Option

How it works: Giant fans blow air over servers, like putting 10,000 laptops in a room with industrial HVAC

Noise: 85-100+ decibels (standing next to a running lawn mower, 24/7)

Energy: PUE of 1.3-1.5 (for every watt spent on computing, another 0.3-0.5 watts go to cooling)

Water: Actually minimal for air cooling itself, but massive electricity demand

Why it exists: Cheapest to build

PUE (Power Usage Effectiveness) is like your car’s gas mileage. A PUE of 1.5 means that for every dollar you spend computing, you’re burning 50 extra cents just to keep the servers cool. Terrible efficiency, but cheap to build.

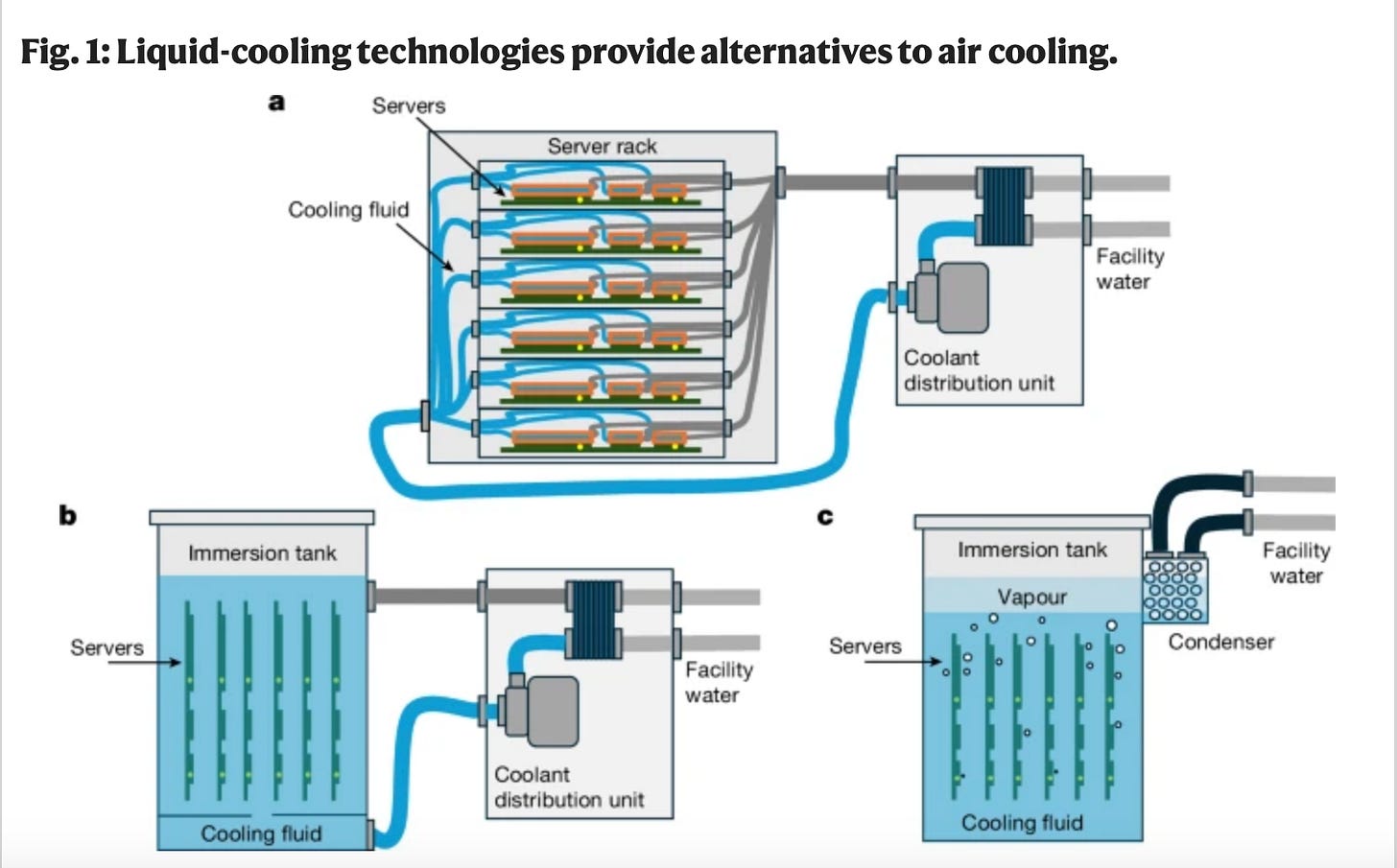
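Since PUE is just a ratio (total facility energy divided by the energy that reaches the servers), the “extra cents” framing falls out directly. A minimal sketch, treating all non-compute energy as overhead; in practice that overhead is mostly cooling but also includes power-distribution losses and lighting. The PUE values are the ones quoted in this article.

```python
# PUE = total facility energy / energy delivered to IT equipment (servers).
def overhead_per_unit_compute(pue: float) -> float:
    """Extra energy (mostly cooling) spent per unit of compute energy."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return pue - 1.0

def facility_energy(it_kwh: float, pue: float) -> float:
    """Total energy a facility draws to deliver `it_kwh` of compute."""
    return it_kwh * pue

# PUE values quoted in this article
facilities = {
    "air-cooled (1.5)": 1.5,
    "closed-loop water (1.3)": 1.3,
    "cold plate (1.2)": 1.2,
    "Hamina, Finland (1.10)": 1.10,
}
for name, pue in facilities.items():
    cents = overhead_per_unit_compute(pue) * 100
    print(f"{name}: {cents:.0f} cents of overhead per dollar of compute energy, "
          f"{facility_energy(1000, pue):.0f} kWh total per 1,000 kWh of compute")
```

Because the overhead is a fixed multiple of compute energy, cutting PUE helps every query a facility serves, which is why it outweighs any one user’s habits.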

Closed-Loop Water Cooling: 20-30% Better

How it works: Water circulates through pipes, absorbs heat, gets cooled in towers, repeats (like a car’s radiator system but building-sized)

Noise: 55-75 decibels (loud conversation level)

Energy: PUE of 1.2-1.3 (so instead of 50 cents on cooling for every dollar of computing, it’s 20-30 cents)

Water: Only 5% loss annually (closed loop = reuses the same water over and over)

Why it’s better: Much quieter, wastes less energy

Cold Plate Liquid Cooling: Best Currently Available

How it works: Liquid flows directly onto server chips (like gaming PC cooling, but warehouse-scale)

Noise: Much quieter (mostly internal pumps)

Energy: PUE of 1.15–1.25

Water: 31–52% reduction vs. air cooling

Carbon: 15–21% lower GHG emissions

Why it’s not universal: ~30% higher upfront cost

Immersion Cooling: The Future of Data Centers

How it works: Servers literally submerged in non-conductive liquid (like mineral oil)

Noise: Nearly silent (no fans needed)

Energy: PUE of 1.05-1.15 (only 5-15 cents wasted per dollar—most efficient)

Water: 30-50% reduction compared to air cooling

Why it’s not common yet: Expensive to implement, and some fluids (PFAS-based) face environmental restrictions

The Microsoft Study

Microsoft researchers conducted a peer-reviewed lifecycle assessment comparing air, cold plate, and immersion cooling. Over 10 years:

Water: 31–52% less

Energy: 15–20% less

Carbon: 15–21% lower emissions

That’s the difference between cooling technologies—what the data center chooses, not what you choose. Compare that to using ChatGPT 10% less often—you’d save maybe 2% water, 2% energy.

Infrastructure choices matter 10–25x more than individual usage patterns.
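The 10–25x figure can be reproduced from this section’s own numbers by dividing the Microsoft study’s savings by the article’s rough estimate that using ChatGPT 10% less saves about 2%. This is a back-of-envelope ratio that depends on that 2% assumption; it is not a result reported by the study itself.

```python
# Savings reported by the Microsoft cooling study (percent ranges quoted above)
infrastructure_savings = {
    "water": (31, 52),
    "energy": (15, 20),
    "carbon": (15, 21),
}
# The article's rough estimate: using ChatGPT 10% less saves about 2%
USAGE_SAVINGS_PCT = 2.0

def multiplier(savings: tuple, baseline_pct: float) -> tuple:
    """How many times larger each end of a savings range is than the baseline."""
    low, high = savings
    return low / baseline_pct, high / baseline_pct

for resource, savings in infrastructure_savings.items():
    low_x, high_x = multiplier(savings, USAGE_SAVINGS_PCT)
    print(f"{resource}: {low_x:.1f}x to {high_x:.1f}x")
```

Water comes out around 15-26x and energy and carbon around 7.5-10.5x, so “10-25x” is a rounded summary across resources rather than a single measured value.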

Local vs. Cloud AI: Running Models on Your Computer vs. Data Centers

Here’s the thing almost no one talks about: You can run AI models on your own computer.

They’re smaller and less capable than GPT-4, but for many writing tasks (grammar checking, style suggestions, brainstorming) they’re plenty good. So when does local make sense versus cloud?

LOCAL (Running on Your Device)

How It Works

Download a model once (like installing software)

Runs on your laptop/desktop GPU or CPU

No internet needed after initial download

Common tools: LM Studio, Ollama, LocalAI

Environmental Cost

One-time download: ~4–7 GB (like downloading 2–3 movies)

Electricity: Whatever your laptop already uses

Water: Zero (your laptop has a fan, not a cooling tower)

Carbon: Minimal (your local electricity grid, which you’re already using)

Practical Reality for Writers

✅ Great for: Grammar/style checking, brainstorming, outlining, character name generation

✅ Privacy bonus: Your draft never leaves your device

✅ Works offline: Write in coffee shops or on planes, no connectivity needed

❌ Limited by your hardware: Slower on older computers, can’t run largest models

❌ Less sophisticated: Won’t match GPT-4’s nuanced understanding

❌ Upfront learning curve: Requires setup, not as polished as ChatGPT interface

Let’s say you set up a local model once for your writing style. That setup takes about two hours of computer time: roughly 0.3 kWh, which is about 5 cents of electricity at typical U.S. rates and 0.15 kg CO₂. Then you use it for a year.

Compare that to cloud: 50 queries/day over a working year (about 250 days) = 125 kWh and 62.5 kg CO₂.

Local wins by over 400x on carbon, and uses literally zero water.

BUT (and this is important) that math only works if:

You actually run it for a full year (setup cost gets spread out)

The local model can do what you need

You’re not just using it to generate garbage (bad writing wastes your own time)
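The carbon comparison above can be sketched as follows. It uses the article’s per-query cloud figures (~0.01 kWh and ~0.005 kg CO₂) and assumes about 250 working days a year, which is what makes 50 queries a day come out to 125 kWh. Like the prose, it counts only the local model’s setup energy, not the electricity your computer draws during later use, so it flatters the local option.

```python
KWH_PER_QUERY = 0.01          # cloud, per the published estimates cited here
KG_CO2_PER_KWH = 0.5          # implied by 0.005 kg CO2 per 0.01 kWh
QUERIES_PER_DAY = 50
WORKING_DAYS = 250            # assumption: reproduces the 125 kWh figure

cloud_kwh = KWH_PER_QUERY * QUERIES_PER_DAY * WORKING_DAYS
cloud_co2 = cloud_kwh * KG_CO2_PER_KWH

LOCAL_SETUP_KWH = 0.3         # ~2 hours of computer time for setup
local_co2 = LOCAL_SETUP_KWH * KG_CO2_PER_KWH  # ignores later local usage

print(f"Cloud: {cloud_kwh:.0f} kWh, {cloud_co2:.1f} kg CO2 per year")
print(f"Local setup: {local_co2:.2f} kg CO2")
print(f"Ratio: about {cloud_co2 / local_co2:.0f}x")
```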

CLOUD (ChatGPT, Claude, Gemini, etc.)

How It Works

Your query goes to a data center

Massive servers process it

Response comes back

You pay in subscription fees or environmental cost distributed across millions of users

Environmental Cost (Per Published Studies)

Per query: ~519ml water, ~0.01 kWh electricity, ~0.005 kg CO₂

Scales with usage: More queries = more cost

Infrastructure matters: Air cooling vs. liquid cooling can change impact by 30–50%

Practical Reality for Writers

✅ Most sophisticated: Models like GPT-4 and Claude 3.5 understand context better

✅ Zero setup: Open browser, start typing

✅ Always improving: Updates happen automatically

✅ No hardware requirements: Works on any device

❌ Ongoing cost: Financial (subscription) and environmental (per query)

❌ Privacy concerns: Your work goes to company servers

❌ Requires internet: No offline work

When Cloud Makes Environmental Sense

If you use AI occasionally—a few times a week, not daily—cloud is probably better environmentally.

Why? Because building and disposing of a powerful enough computer to run local models well has its own environmental cost.

According to lifecycle analyses, the carbon footprint of manufacturing a high-end GPU (like NVIDIA’s H100 or RTX 4090) can range from 100–300 kg CO₂, depending on fabrication, materials, and shipping.

That’s equivalent to 5+ years of moderate cloud usage.
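A rough break-even check between buying hardware and using the cloud, assuming the article’s ~0.005 kg CO₂ per cloud query and the 100–300 kg range for manufacturing a GPU. The two usage levels are hypothetical: “heavy” is 50 queries a day over ~250 working days, and “occasional” is five queries a week. It ignores the electricity a local machine would use, so it understates the case for cloud.

```python
KG_CO2_PER_QUERY = 0.005
GPU_EMBODIED_KG = (100, 300)   # manufacturing footprint range quoted above

usage_profiles = {             # hypothetical annual query counts
    "heavy (50/day, 250 days)": 50 * 250,
    "occasional (5/week)": 5 * 52,
}

def breakeven_years(embodied_kg: float, queries_per_year: int) -> float:
    """Years of cloud use whose emissions equal the GPU's manufacturing footprint."""
    return embodied_kg / (queries_per_year * KG_CO2_PER_QUERY)

for name, queries in usage_profiles.items():
    low, high = (breakeven_years(kg, queries) for kg in GPU_EMBODIED_KG)
    print(f"{name}: {low:.1f} to {high:.1f} years of cloud use")
```

For occasional users the GPU’s manufacturing footprint outweighs decades of cloud queries, which supports the advice above; daily heavy users reach break-even within a few years.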

7 Myths About AI and the Environment (Debunked with Data)

Months of research taught me something frustrating: Most of what people argue about online regarding AI and the environment is based on misunderstandings.

Here are the myths I kept encountering, the truth, and why the distinction matters.

Myth #1: “AI Data Centers” Only Power Generative AI

What People Believe

“AI Data Center.” It sounds like specific buildings running ChatGPT, separate from the rest of the internet.

What’s True

Data centers run everything that happens “in the cloud”:

Netflix streaming

Google searches (traditional, not AI)

Email (Gmail, Outlook)

Cloud storage (Google Drive, Dropbox, iCloud)

Social media feeds

Video calls (Zoom, Teams)

Banking apps

Weather apps

Literally every app on your phone that stores data remotely

And generative AI

Why This Myth Matters

The term “AI Data Center” is mostly marketing and media shorthand. Some facilities are optimized for AI workloads, but they’re part of the same infrastructure powering everything we do online.

Traditional data centers support general computing, while AI data centers are built for high-performance AI workloads. As AI adoption grows, more organizations are transitioning to AI-optimized infrastructure to stay competitive.

Myth #2: Training Models vs. Using Them Is About The Same

What People Believe

Training GPT-3 used as much electricity as 120 homes for a year, therefore every time you use ChatGPT you’re using that much power.

What’s True

Training GPT-3 (One Time, 2020)

Energy used: ~1,287 MWh

Equivalent to: Powering 120 U.S. homes for a full year

Done once: Model exists and is reused indefinitely

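Using ChatGPT (Per Query)

Energy used: ~0.01 kWh by the estimate used in this article (some newer estimates put it at ~0.0003–0.0005 kWh, depending on the model)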

Equivalent to: Powering one LED lightbulb for 1 hour

Multiplied by billions: Global usage adds up, but per-query cost is tiny

Why This Myth Matters

You’d need to run 128,700,000 queries to equal the energy of training GPT-3 once. If you did 50 queries per day, that would take you about 7,050 years.

For perspective: about 7,000 years ago, writing hadn’t been invented yet.
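The break-even arithmetic, as a sketch using the figures in this section: ~1,287 MWh to train GPT-3 and ~0.01 kWh per query, the per-query estimate that produces the 128,700,000 count. With the lower per-query estimates, the number of queries would be far larger.

```python
TRAINING_KWH = 1_287_000      # ~1,287 MWh to train GPT-3
KWH_PER_QUERY = 0.01          # per-query estimate behind the 128.7M figure
QUERIES_PER_DAY = 50

queries_to_match = TRAINING_KWH / KWH_PER_QUERY
years = queries_to_match / QUERIES_PER_DAY / 365

print(f"{queries_to_match:,.0f} queries, or about {years:,.0f} years at 50/day")
```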

Myth #3: AI Uses Water Directly (It Doesn’t)

What People Believe

AI consumes water directly: it guzzles it, and it’s gone forever.

What’s True

Data centers use water for cooling, not computing. A breakdown of what happens:

Servers generate heat (from electricity)

Heat needs to be removed or servers overheat and fail

In water-cooled facilities:

Water circulates through cooling systems

Water absorbs heat from servers

Hot water goes to cooling tower

Cooling tower evaporates some water to release heat (this is the ‘consumption’)

Cooled water returns to servers

The water that “disappears” is evaporated water.

Why This Myth Matters

When I say ‘one query uses 519ml of water,’ that means:

519ml evaporates from the cooling tower

That water is no longer liquid water in the local system

It’s now water vapor in the atmosphere

Eventually it will rain somewhere (that’s how water cycles work)

If you’re evaporating millions of gallons in a drought-stressed region like Chile or Arizona, you’re removing liquid water from an ecosystem that desperately needs it. The water isn’t destroyed, but it’s no longer available locally.

The type of cooling system used by a data center makes a difference.

Myth #4: Individual AI Usage Doesn’t Matter At All

What People Believe

‘Great, so nothing I do matters, I can use AI however I want without thinking about it.’

What’s True

This is a tricky one because there’s truth from multiple perspectives.

TRUE: Individual queries have small environmental impact vs. infrastructure decisions.

ALSO TRUE: Collective usage patterns determine the type of infrastructure

ALSO TRUE: Wasteful AI usage is still wasteful

Think of it like voting. Your one vote has a small impact, but collective patterns determine the election. Your usage is a vote for the types of infrastructure that get built.

Why This Myth Matters

Using AI to revise your chapter opening? Reasonable use.

Using AI to generate content you’ll immediately delete? Waste.

Using AI to procrastinate writing? Waste.

The environmental cost is the same, but waste compounds—you burn resources AND make no progress.

Myth #5: AI Will Consume All Electricity by 2030

What People Believe

“The wave of AI infrastructure spending will require $2 trillion in annual AI revenue by 2030. By comparison, that is more than the combined 2024 revenue of Amazon, Apple, Alphabet, Microsoft, Meta and Nvidia, and more than five times the size of the entire global subscription software market.”

What’s True

These projections usually:

Assume current growth rates continue exponentially forever (they won’t)

Assume zero efficiency improvements (there will be improvements)

Ignore physical and economic constraints

Extrapolate worst-case scenarios as if they’re inevitable

Growth projections are unreliable. Think about previous technology panics:

1990s: ‘The internet will consume all electricity’ (didn’t happen—efficiency improved)

2000s: ‘Data centers will consume all electricity’ (efficiency improved faster than usage grew)

2010s: ‘Cryptocurrency will consume all electricity’ (growth slowed, efficiency improved, regulation intervened)

Current Numbers

Data centers use about 1.5% of global electricity, with AI workloads making up 10-15% of that

Data centers may reach 3-4% of global electricity by 2030, with AI workloads rising to 0.5-1%
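Multiplying the current figures through shows how small AI’s slice of global electricity is today. A minimal sketch using only the 1.5% and 10-15% numbers above.

```python
DATA_CENTER_SHARE = 0.015            # ~1.5% of global electricity
AI_FRACTION_OF_DC = (0.10, 0.15)     # AI workloads: 10-15% of data center use

# AI's share of *global* electricity = data center share x AI fraction
low, high = (DATA_CENTER_SHARE * f for f in AI_FRACTION_OF_DC)
print(f"AI today: {low:.3%} to {high:.3%} of global electricity")
```

That works out to roughly 0.15-0.23% of global electricity for AI workloads today.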

Why This Myth Matters

This is real growth, but it’s not “AI is consuming all electricity.” Framing matters. Much of data center energy goes to services like cloud computing and streaming.

Myth #6: Renewable Energy Makes Data Centers Carbon-Neutral

What People Believe

Google runs on 100% renewable energy, so my queries are carbon-free!

What’s True

Energy Matching (What Most Companies Do)

Data center uses X electricity from the grid (which includes fossil fuels)

Company buys X renewable energy credits (RECs) or funds solar/wind projects

Over the year, the numbers balance out

But: Your 2AM query might still run on coal-fired electricity

Google previously claimed ‘100% renewable’ through annual matching since 2017, but switched to reporting actual hourly carbon-free energy—achieving 66% in 2024—because annual matching didn’t reflect reality.

24/7 Carbon-Free Energy (What Few Do)

Every hour of every day, electricity is matched with carbon-free sources

Requires local renewable generation + battery storage

Much harder to achieve, especially in regions with limited clean energy

Google has achieved this at some facilities, aiming for 100% by 2030
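The gap between annual matching and 24/7 carbon-free energy is easiest to see with toy numbers. This sketch uses made-up load and clean-supply values for four time blocks (not real Google data) and scores hourly matching in the spirit of 24/7 CFE accounting: in each period, clean energy only counts up to that period’s load, so a midday solar surplus cannot cover a 2 AM query.

```python
# Hypothetical data center: load and clean (solar/wind) supply in MWh for four
# representative time blocks. Illustrative numbers only.
load = {"2 AM": 100, "8 AM": 100, "2 PM": 100, "8 PM": 100}
clean = {"2 AM": 20, "8 AM": 80, "2 PM": 240, "8 PM": 60}

def annual_match(load: dict, clean: dict) -> float:
    """Volume matching: total clean energy bought / total energy used."""
    return sum(clean.values()) / sum(load.values())

def hourly_cfe(load: dict, clean: dict) -> float:
    """24/7-style matching: clean energy counts only up to each period's load."""
    matched = sum(min(load[period], clean[period]) for period in load)
    return matched / sum(load.values())

print(f"Annual-style matching: {annual_match(load, clean):.0%}")
print(f"Hourly carbon-free energy: {hourly_cfe(load, clean):.0%}")
```

The same facility can honestly claim “100% matched” on an annual basis while only about two-thirds of its actual consumption is covered by clean power in the hour it is used.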

Why This Myth Matters

Renewable energy matching is better than nothing—it drives energy development. But it’s not the same as actually running renewables 24/7.

When evaluating providers:

Good: Company has renewable energy goals

Better: Company publishes facility-specific renewable percentages

Best: Company is achieving 24/7 carbon-free energy at specific facilities.

Myth #7: Creative Professionals Are The Main Problem

What People Believe

Every time you generate an image or story, you needlessly guzzle water and electricity.

What’s True

Recent studies show that AI writing and illustration emit far less carbon than humans performing the same tasks manually.

The researchers said that this does not mean that AI should replace humans, simply that energy usage is less. The better approach is a partnership between humans and AI.

Why This Myth Matters

Misplaces blame on creators instead of infrastructure and enterprise-scale usage

Undermines accessibility by shaming neurodiverse or disabled users who benefit from AI tools

Distracts from real leverage points like cooling systems, training efficiency, and energy sourcing

The math: Estimated writers using AI regularly worldwide: ~5-10 million. Their queries represent roughly 1.7-3.4% of total AI usage.

Meanwhile, video streaming accounts for 20-25% of data center load, social media for 15-20%, and cloud storage for another 15-20%.
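The 1.7–3.4% range is consistent with dividing 5–10 million writers by roughly 300 million total AI users and assuming writers query about as often as everyone else. Both the ~300 million denominator and the equal-usage assumption are inferences for illustration, not figures stated in this article.

```python
WRITERS = (5_000_000, 10_000_000)   # estimated regular AI users who write
TOTAL_USERS = 300_000_000           # assumption: inferred denominator

def usage_share(group_users: int, total_users: int,
                relative_intensity: float = 1.0) -> float:
    """Group's share of all queries if its members query `relative_intensity`
    times as often as everyone else (1.0 = same rate)."""
    group = group_users * relative_intensity
    others = total_users - group_users
    return group / (others + group)

for writers in WRITERS:
    print(f"{writers:,} writers: {usage_share(writers, TOTAL_USERS):.1%} of queries")
```

Even if the 10 million writers queried twice as often as everyone else, `usage_share(10_000_000, TOTAL_USERS, 2.0)` stays under 7% of queries.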

What Writers Can Do: Actionable Steps Beyond Usage Reduction

Telling someone to stop using AI to save water is like telling them to stop using the internet to save electricity—technically true, practically useless. Instead, here’s where we writers have power.

Tier 1: Your Usage Patterns (Small Impact, Easy Control)

Use local AI for routine tasks

Impact: Up to 400x reduction for tasks that work locally

Difficulty: Medium (requires setup, but one time)

When To Do This: Grammar checking, simple style edits, brainstorming, character name generation

When To Stick with Cloud: Complex analysis, nuanced feedback, latest model capabilities

Action Item:

Try LM Studio (free, user-friendly) with a model like Mistral-7B or Llama 3. Download once, use forever for basic writing tasks. If it works for 50% of your queries, you’ve just cut your environmental impact in half.
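That “cut in half” claim is a weighted average. Here’s a sketch, assuming a local query costs about 1/400th the carbon of a cloud query (the rough ratio from the local-vs-cloud comparison earlier in this article, which counts only setup energy); the offload fractions are hypothetical.

```python
LOCAL_TO_CLOUD_RATIO = 1 / 400   # assumption: a local query ~1/400th the carbon

def relative_footprint(local_fraction: float) -> float:
    """Footprint relative to all-cloud use when `local_fraction` of queries run locally."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    cloud_part = 1.0 - local_fraction
    local_part = local_fraction * LOCAL_TO_CLOUD_RATIO
    return cloud_part + local_part

for f in (0.25, 0.5, 0.75):
    print(f"{f:.0%} of queries local -> {relative_footprint(f):.1%} of original footprint")
```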

Batch your queries instead of iterating

Impact: Marginal (may save 10-20% by reducing back and forth)

Difficulty: Easy

How: Instead of multiple generations, write exactly what you want in one detailed prompt

Example:

❌ “Make this dialogue snappier” [wait] “Too modern” [wait] “Add more tension”

✅ “Make this dialogue snappier while keeping period-appropriate language and adding underlying tension between characters”

Know when NOT to use AI

Impact: 100% for those queries

Difficulty: Requires self-awareness

When AI Is Environmental Waste: Procrastination queries, generating content you’ll delete, asking questions you already know the answer to

Honest Check

If you’re using ChatGPT to avoid writing (asking it to brainstorm for the third time instead of just writing), you’re wasting water to waste your own time.

Tier 2: Which Services You Choose (Medium Impact)

Choose your providers with transparent infrastructure

Impact: Unknown (but you’re voting with your wallet)

Difficulty: Easy (just pick a different subscription)

Provider transparency ranking (based on public sustainability reports, 2024-2025):

MOST TRANSPARENT:

Google: Publishes facility-specific water usage

Microsoft: Published peer-reviewed lifecycle assessment in Nature (January 2025)

MODERATE TRANSPARENCY:

OpenAI: Reports aggregate sustainability metrics but few facility-specific details

Anthropic (Claude): Growing transparency, partnering with sustainable data centers

LEAST TRANSPARENT:

Many smaller providers: No public sustainability reporting available

Action Item

Google and Microsoft’s transparency didn’t happen by accident. It happened because customers and regulators demanded it.

Look for providers using renewable energy

Impact: 50-70% carbon reduction (but doesn’t address water)

Difficulty: Easy (just research it)

Current Status

Google: 100% renewable energy matching

Microsoft: 100% renewable by 2025 commitment

AWS (Amazon): 100% renewable by 2025 commitment

Many smaller providers: Depends on grid mix where servers are located

Nuance

‘Renewable energy matching’ means they buy enough renewable energy credits to offset usage, but your 2am query might still run on coal power. True 24/7 carbon-free energy is rare. Still, matching is better than nothing.

Tier 3: Advocacy and Infrastructure Pressure (High Impact)

Demand infrastructure transparency from AI companies

Impact: Potentially massive (if enough users demand it)

Difficulty: Medium (requires organization)

What to demand:

Facility-specific water usage (not just aggregate)

Cooling system type for each data center

Siting decisions (are they building in water-stressed areas?)

Community impact assessments before construction

How to demand it:

Email customer support (companies track support ticket topics)

Public social media posts (companies monitor brand mentions)

Support organizations already doing this work

Support community-engaged data center development

Impact: High (determines whether infrastructure is built sustainably)

Difficulty: Medium to High (requires local involvement)

What this means:

When data centers propose construction in your area, show up to public hearings

Demand environmental impact assessments BEFORE approval

Support requirements for: liquid cooling, renewable energy, buffer zones, community benefit agreements

Real examples

Quincy, Washington: Microsoft funded a water reuse facility ($35M) saving 380 million gallons annually, because the community negotiated for it

The Dalles, Oregon: A legal fight over keeping Google’s water usage secret ended in public disclosure after community and press pressure

Chile: Community referendum + court challenge blocked Google project over water concerns

Why Individual Action Isn’t Enough

I’m going to level with you. Everything in the previous section? It helps. It matters. But it’s not enough. Here’s why.

The Math That Doesn’t Work:

If every ChatGPT user reduced queries by 50%: Would save significant resources

If one major provider switched from air to liquid cooling: Would save MORE resources

If AI training efficiency improved by 10%: Would dwarf all consumer conservation

This doesn’t mean individual action is pointless.

It means:

Don’t guilt yourself over using AI tools (your impact is tiny)

Do pressure companies for better infrastructure (their impact is massive)

Support regulation requiring sustainable practices (market won’t fix this alone)

The Policy Gap:

16 of 36 U.S. state data center subsidies require ZERO environmental standards

No federal cooling system requirements

No mandatory water usage disclosure

No community benefit agreements required

What’s Already Happening in Ireland:

Data centers now use 21% of the country’s electricity

Ireland imposed a moratorium on new data center grid connections (2022-2028)

Why: Infrastructure couldn’t handle growth

What This Means For Writers:

If Ireland’s grid can’t handle AI growth, and communities keep blocking projects, and companies don’t invest in efficient cooling... AI services will get more expensive, slower, or rationed. Writers who want continued access to these tools should want sustainable infrastructure.

The Water Bottle Revisited

That water bottle on your desk. The 519 milliliters per query. I’m not going to tell you to feel guilty about it.

But I am going to tell you to see it.

Every technology has costs. AI’s costs are water, energy, and carbon—distributed across infrastructure most of us never see. Your individual queries matter less than you think. The infrastructure decisions made by companies matter more than you think. Your advocacy for better infrastructure matters most of all.

So use AI. Use it well. Use it intentionally. And demand that the companies providing it do better than air-cooled data centers in water-stressed regions, running on fossil fuels with diesel backup generators.

Because the real environmental cost of your AI writing assistant isn’t the queries you run. It’s the infrastructure we’re collectively building or failing to build sustainably.

If you do one thing after reading this:

Email your AI provider asking for facility-specific water usage data

Or try local AI for one task you currently do in cloud

Or share this article with another writer who doesn’t know this stuff yet

Because infrastructure built badly now is infrastructure we’re stuck with for 20-30 years. And writers who want these tools to exist sustainably have a stake in getting it right.