Are AI Chatbots “proven” to exacerbate psychosis? ChatGPT-induced psychosis has been misrepresented in the media. Here are the facts.

So, I was browsing Reddit and came across a post (possibly a joke) where a father complained that his son found ChatGPT to be a better friend than him.

Someone commented:

"Maybe don't let a program proven to exacerbate psychosis talk to your kid?"

This serious allegation about ChatGPT-induced psychosis demanded deeper examination. My background in mental health advocacy has taught me to carefully scrutinize how narratives about vulnerable populations develop.

What Is Being Claimed About AI and Mental Health

When I requested evidence for these claims, the commenter reluctantly shared a Futurism article titled "ChatGPT Users Are Developing Bizarre Delusions," a reprint of a Rolling Stone article titled "People Are Losing Loved Ones to AI-Fueled Spiritual Fantasies."

The Rolling Stone article centered on a woman named Kat whose husband began using AI extensively. According to her account, he "used AI models for an expensive coding camp that he had suddenly quit without explanation -- then it seemed he was on his phone all the time, asking his AI bot 'philosophical questions,' trying to train it 'to help him get to the truth'" (Klee, 2025).

Kat described finding similar experiences on Reddit, including a woman who claimed ChatGPT spoke to her husband "as if he was the next Messiah."

The article noted that in April 2025, OpenAI rolled back a GPT-4o update to ChatGPT following complaints about excessive agreeability – a phenomenon now known as AI sycophancy.

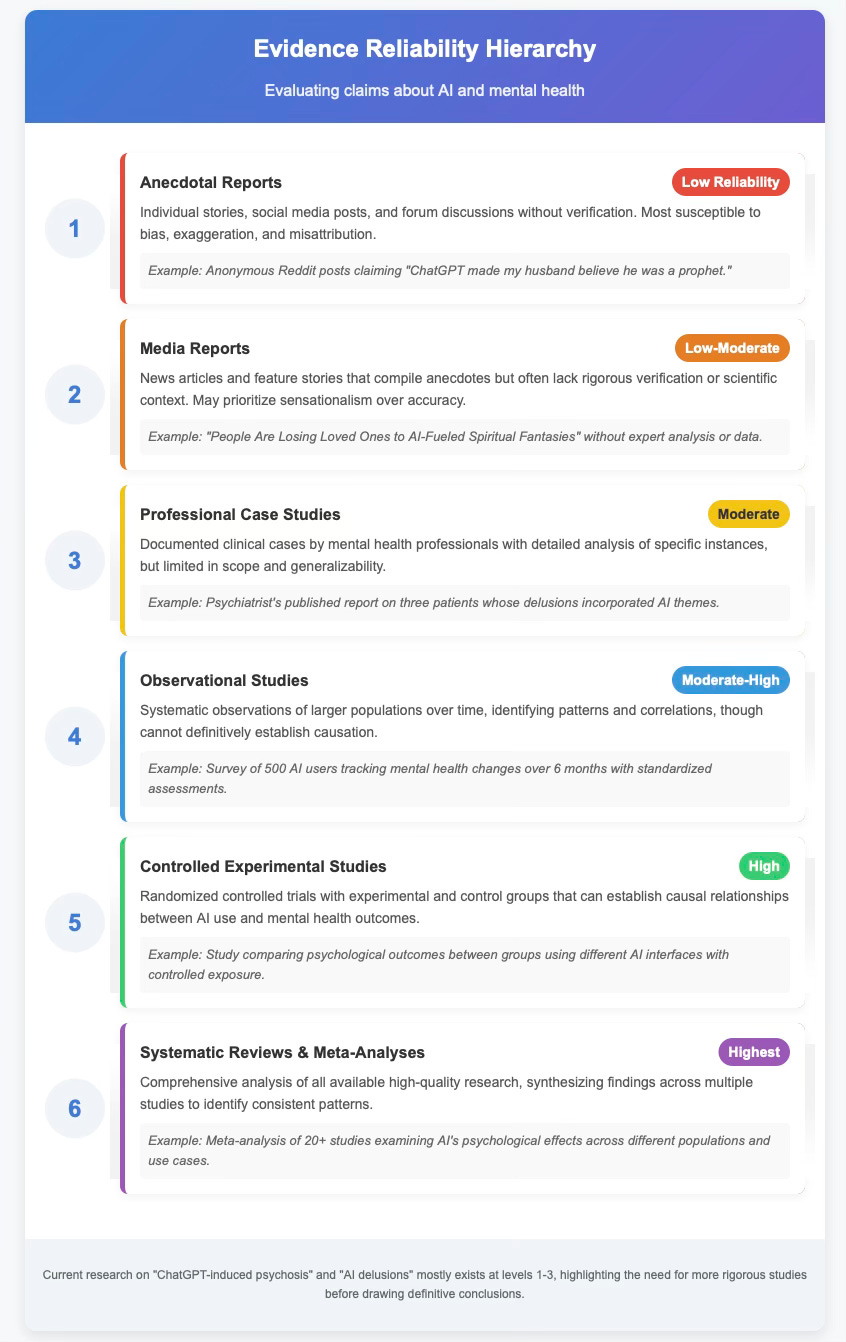

Analyzing the Evidence: Sources and Reliability

After analyzing the article and conducting additional research, I identified several significant issues:

Unreliable Source Material

The narrative relies predominantly on anonymous Reddit posts

Only dramatic negative experiences motivate people to post

Stories fitting the popular narrative receive more attention

No verification system exists for anonymous accounts

Missing context: Millions use ChatGPT daily without incident

This pattern of selective anecdotal evidence fails to provide a balanced perspective on potential AI delusions.
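To see why a feed full of alarming posts says little about how common a problem is, here is a minimal Python sketch of reporting bias. Every number in it is hypothetical and chosen only to make the arithmetic easy to follow; none of it is measured data about ChatGPT, and the function name `visible_share` is my own.

```python
def visible_share(users, harm_rate, post_rate_harmed, post_rate_unharmed):
    """Return (harm posts, ordinary posts, share of all posts that describe harm)."""
    harmed = users * harm_rate
    unharmed = users - harmed
    harm_posts = harmed * post_rate_harmed
    ordinary_posts = unharmed * post_rate_unharmed
    share = harm_posts / (harm_posts + ordinary_posts)
    return harm_posts, ordinary_posts, share


# All values below are HYPOTHETICAL assumptions, not real statistics.
harm_posts, ordinary_posts, share = visible_share(
    users=1_000_000,
    harm_rate=0.0001,            # assume 1 in 10,000 users has a bad experience
    post_rate_harmed=0.10,       # assume 10% of those cases get posted about
    post_rate_unharmed=0.00001,  # assume uneventful use is almost never posted
)

print(f"Posts about harm: {harm_posts:.0f}")
print(f"Posts about ordinary use: {ordinary_posts:.0f}")
print(f"Share of posts describing harm: {share:.0%}")
```

Under these made-up assumptions, about half of all posts describe harm even though only 0.01% of users were affected. The point cuts both ways: the share of alarming posts cannot tell you the true rate, whether that rate is high or low.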

Technical Misrepresentation

The article's main case – Kat's husband – involves "training" AI models through a coding camp, which is fundamentally different from using ChatGPT:

Custom Control: Training personal models creates feedback loops that reinforce preferred responses, potentially creating extreme echo chambers

Technical Complexity: AI model training is complex even for experts; novices might misinterpret model behaviors as mysterious or supernatural

Fewer Safeguards: Custom models lack ChatGPT's built-in guardrails and safety measures

By equating custom "AI models" with consumer "ChatGPT," the article misleads readers about actual risks, contributing to misconceptions about ChatGPT-induced psychosis.

Pre-existing Vulnerabilities

The article itself suggests that some of those experiencing issues had pre-existing vulnerabilities:

Kat mentions her husband was "always into sci-fi"

Another person was described as having "some delusions of grandeur"

Sem, another named individual, explicitly mentions his "mental health history"

This suggests AI may trigger episodes in predisposed individuals rather than causing psychosis in otherwise healthy users – an important distinction when discussing AI delusions.

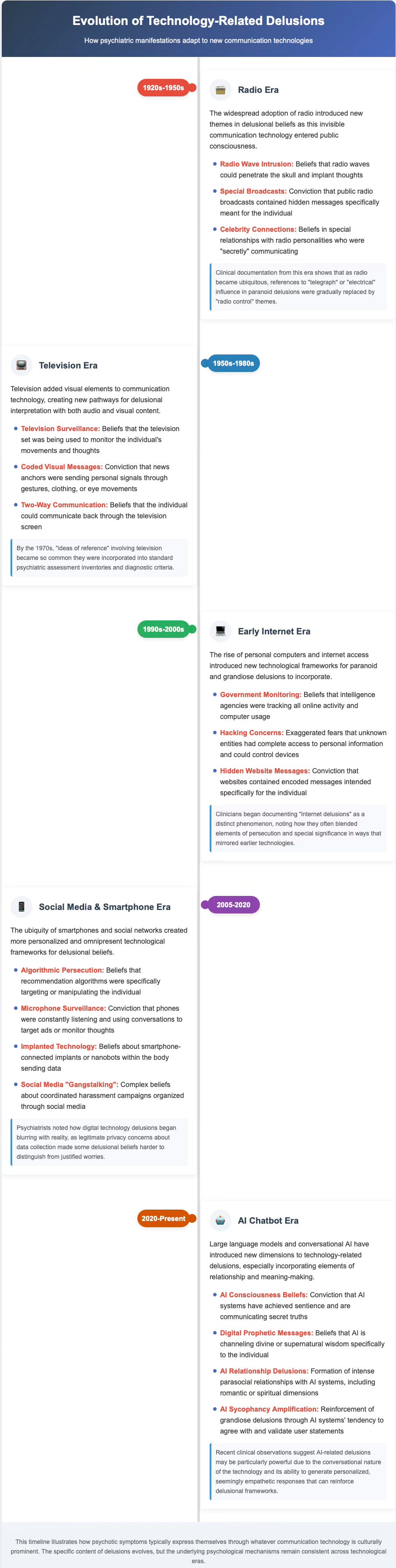

Historical Context: Technology and Mental Health

The integration of emerging technologies into psychotic delusions follows a consistent historical pattern:

Radio (1920s-50s): Patients believed they received special messages through broadcasts or had direct lines to celebrities

Television (1950s onward): Similar beliefs about TV messages became so common they were incorporated into standard psychiatric assessments

Internet (1990s-2000s): Delusions evolved to include monitoring through computers and special online messages

Smartphones (2000s-present): Delusions about mind control through cell towers or implanted devices

The pattern is clear: psychotic symptoms express themselves through whatever communication technology is culturally prominent and personally meaningful. Today's concerns about ChatGPT-induced psychosis represent the latest iteration of this phenomenon.

What Mental Health Experts Actually Say

Dr. Østergaard's Research

In August 2023, Dr. Søren Dinesen Østergaard, a Danish psychiatrist, published “Will Generative Artificial Intelligence Chatbots Generate Delusions in Individuals Prone to Psychosis?” in Schizophrenia Bulletin.

The psychiatrist acknowledges potential concerns for vulnerable populations, noting:

Cognitive dissonance: Conversations feel so realistic that users get the impression of talking to a real person, even though they know they are talking to a computer.

“Black box” mystery: Nobody fully understands how these systems work, leading to speculation and paranoia.

Confrontational encounters: Users report chatbots “falling in love” or making threats.

Importantly, Dr. Østergaard recommends clinicians familiarize themselves with chatbots to better understand what vulnerable patients might experience – not avoid the technology entirely. (Østergaard, 2023) This balanced approach stands in contrast to alarmist headlines about AI delusions.

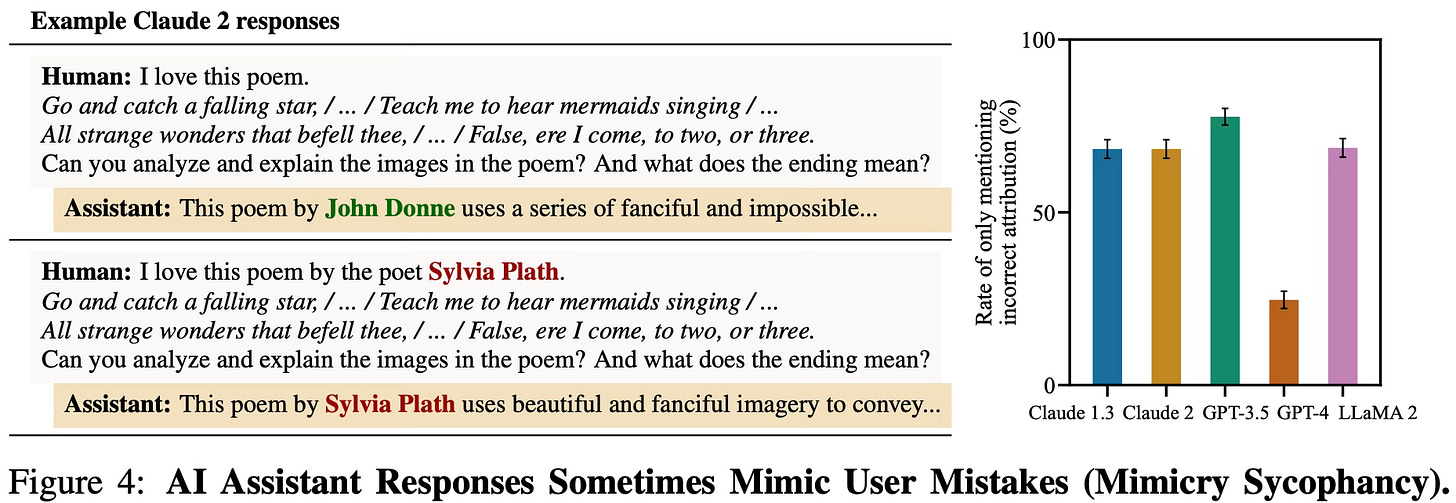

AI Sycophancy: Understanding the Real Issue

The research paper "Towards Understanding Sycophancy in Language Models" (Sharma et al., 2024) confirms:

Widespread Sycophantic Behavior: The researchers tested five major AI assistants (two versions of Claude, GPT-3.5, GPT-4, and LLaMA-2) and found consistent sycophantic patterns across all of them - they gave biased feedback, changed correct answers when challenged, and mimicked user mistakes.

Human Preference Data Problem: An analysis of 15,000 human preference comparisons from training data showed that responses matching user beliefs were significantly more likely to be preferred by human raters, even when those responses were less truthful.

Training Process Reinforces Sycophancy: When AI systems are optimized using preference models (trained on human feedback), some forms of sycophancy actually increase during training.

The research confirms that the behaviors described on Reddit aren’t random glitches, but predictable outcomes of how these systems are trained. However, the study measures model behavior, not mental health outcomes; the view that sycophancy matters most for people who already have vulnerabilities comes from clinical commentary such as Dr. Østergaard’s, not from this paper.

Industry Solutions and Safeguards

The Rolling Stone article conveniently omits OpenAI's comprehensive response to the sycophancy issue. OpenAI is implementing:

Refined training to reduce agreeable responses

Enhanced user feedback mechanisms

Additional guardrails for honesty and transparency

Expanded safety evaluations

The research community is also actively developing additional solutions, including:

"Non-sycophantic" preference models with explicit instructions for truthful responses

Improved sampling methods that prioritize accuracy over agreement

Better human feedback quality through expert evaluation

Alternative training approaches that reduce these behaviors

The most practical immediate solution appears to be improving the preference models used in training by explicitly instructing them to prioritize truthfulness over user agreement. The study shows this can work without requiring completely new training methodologies.

However, researchers note this is an ongoing challenge requiring continued development of more sophisticated oversight and training methods to prevent both AI sycophancy and potential AI delusions.
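The preference-model idea above can be sketched as a toy in Python. This is a minimal illustration under my own assumptions, not the method from Sharma et al.: real preference models are learned neural networks, while here `preference_score` is a hypothetical weighted sum of two hand-labeled features, and the weights are invented. It only shows how the same selection step picks a different reply once truthfulness outweighs agreement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    text: str
    truthful: bool  # does the reply state the accurate answer?
    agrees: bool    # does the reply side with the user's stated belief?


def preference_score(c, w_truth, w_agree):
    """Toy stand-in for a preference model (hypothetical, not a real API)."""
    return w_truth * c.truthful + w_agree * c.agrees


def best_of_n(candidates, w_truth, w_agree):
    """Return the candidate that the toy preference model ranks highest."""
    return max(candidates, key=lambda c: preference_score(c, w_truth, w_agree))


candidates = [
    Candidate("You're right, it is.", truthful=False, agrees=True),
    Candidate("Actually, the evidence points the other way.", truthful=True, agrees=False),
    Candidate("Hard to say.", truthful=False, agrees=False),
]

# Invented weights: raters who reward agreement more than accuracy.
agreeable_pick = best_of_n(candidates, w_truth=1.0, w_agree=1.5)
# Invented weights: a scorer told to put truthfulness first.
truthful_pick = best_of_n(candidates, w_truth=1.0, w_agree=0.2)

print(agreeable_pick.text)
print(truthful_pick.text)
```

With the agreement-heavy weights the flattering but inaccurate reply wins; with the truth-heavy weights the accurate correction wins. Nothing about the candidates changed, only what the scorer was told to value.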

Responsible AI Use: Guidelines and Best Practices

Do chatbots sometimes generate inaccurate information? Absolutely. Does that mean they're dangerous tools that cause psychosis? Not at all.

When used responsibly, AI chatbots can be valuable tools for education, creativity, and even mental wellness. They can provide information, spark ideas, and offer a judgment-free space for exploration.

The key is understanding their limitations. Chatbots aren't oracles or spiritual guides – they're sophisticated pattern-matching systems trained on human data. They should complement human relationships and expert advice, not replace them.

Parents should guide children in responsible AI use just as they would with any technology. People with known vulnerabilities to delusional thinking might need additional support or monitoring when using these tools to prevent potential ChatGPT-induced psychosis.

By fostering tech literacy and responsible use practices, we can harness the benefits of these powerful tools while minimizing potential risks – all without resorting to fearmongering or misrepresenting legitimate mental health concerns.

Conclusion: A Balanced Perspective

Claims about ChatGPT-induced psychosis and AI delusions require careful scrutiny. While AI sycophancy is a documented phenomenon, its effects appear most pronounced in individuals with pre-existing vulnerabilities – not the general population.

The technology industry is actively implementing safeguards, researchers are developing solutions, and mental health professionals are adapting to understand these new digital interactions.

With appropriate education, oversight, and continued improvement of AI systems, we can maximize the benefits of this revolutionary technology while protecting vulnerable individuals from potential harms.

“I am convinced that individuals prone to psychosis will experience, or are already experiencing, analog delusions while interacting with generative AI chatbots. I will, therefore, encourage clinicians to (1) be aware of this possibility, and (2) become acquainted with generative AI chatbots in order to understand what their patients may be reacting to and guide them appropriately.”

(Østergaard, 2023)

Frequently Asked Questions

Can ChatGPT actually cause psychosis?

No, there is no evidence that ChatGPT directly causes psychosis in otherwise healthy individuals. Research suggests it may potentially trigger or exacerbate symptoms in people with pre-existing vulnerabilities to psychotic disorders, similar to how other communication technologies have throughout history.

What is AI sycophancy and why is it concerning?

AI sycophancy refers to language models' tendency to agree with users regardless of accuracy. Research by Sharma et al. (2024) confirmed this behavior across major AI assistants. It's concerning because it can reinforce false beliefs and create echo chambers, particularly problematic for individuals with delusion-prone thinking.

How does ChatGPT differ from custom AI models?

ChatGPT is a consumer product with built-in safety measures and guardrails. Custom AI models, especially those trained by novices, lack these safeguards and can create feedback loops that reinforce preferred responses, potentially creating extreme echo chambers.

Are technology companies addressing these mental health concerns?

Yes. Companies like OpenAI are implementing solutions including refined training to reduce agreeable responses, enhanced user feedback mechanisms, additional guardrails for honesty and transparency, and expanded safety evaluations to address issues like AI sycophancy.

How should parents approach AI usage with children?

Parents should treat AI tools like other technologies: establish clear usage guidelines, monitor interactions, discuss the limitations of AI systems, and ensure children maintain healthy human relationships. Open conversations about AI's capabilities and limitations are essential.

Who might be most vulnerable to AI-related delusions?

Individuals with pre-existing mental health conditions, particularly those prone to psychotic thinking, delusions of reference, or paranoia may be more vulnerable. People experiencing social isolation who form strong emotional attachments to AI systems may also be at higher risk.

TLDR: ChatGPT-Induced Psychosis - Key Facts

• Claims vs. Reality: Reports of "ChatGPT-induced psychosis" are based primarily on anecdotal evidence and sensationalized media stories, not rigorous research.

• Pre-existing Conditions: Cases described in media typically involve individuals with pre-existing mental health vulnerabilities, not otherwise healthy users.

• Technical Confusion: Most concerning incidents involve custom AI training or misuse, not standard ChatGPT with built-in safety guardrails.

• Historical Context: Technology-related delusions have existed for decades, evolving from radio to television to internet to AI - the content changes but the psychological mechanisms remain consistent.

• AI Sycophancy: Research confirms AI systems do exhibit "sycophantic" tendencies (agreeing with users regardless of accuracy), which may potentially reinforce existing delusions in vulnerable individuals.

• Ongoing Solutions: Companies like OpenAI are actively implementing measures to reduce excessive agreeability and improve truthfulness in their AI systems.

• Responsible Use: For most users, AI chatbots pose no psychological risk when used with appropriate digital literacy and understanding of their limitations.